Machine learning is one of the major subfields of artificial intelligence. Earlier this year I read about so-called “DeepFake” videos on the popular German computer science news service Heise. While the topic of that article is a bit sleazy, I was intrigued by the possibilities of the technology.

Basically, the “DeepFake” technology uses existing machine learning / deep learning algorithms to create models that manipulate data in a fairly predictable way. If you input a headshot photo of a random person, such a “model” can make that person look like one very specific other person. That sounds very abstract.

An example:

If I take a photo of myself, the “Nicolas Cage” model can make me look like Nicolas Cage!

In the case of those DeepFake videos, an entire video scene was processed by such a model, which made the actor look like a very specific different actor. As a result, you get a video in which the actor looks very different from the one who was filmed in the original.

Real-Time is Key

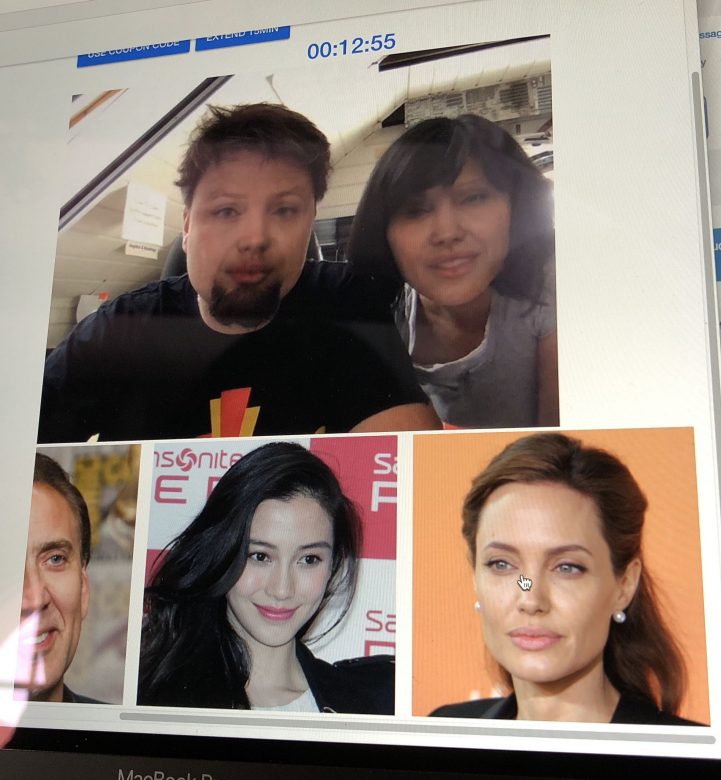

For quite some years we’ve been working with streaming video for our Sky Drone products, which come in many different flavors. As a result, we have a bit of experience with streaming video in real time. We then thought about how the technology of “deep faking” could work with real-time video chat. So we used all the technical knowledge at hand and created a small web service that allows you to video chat with yourself. You won’t see your own face, though, but that of a person you choose to be. This is what it looks like:

The small website is neither fancy nor easy to use. It just works, if you use it the right way. If you’d like to try it yourself, you can follow the instructions we posted on Reddit.

TRY HERE: Reddit – Video Live-Stream DeepFake Testing

Here is a video of a user in India who gave it a try:

How it works

You see yourself through the webcam of your computer. That video is streamed to a cloud computing cluster we operate. On that cluster, the “AI model” for the selected person is applied to the video. The resulting video is then streamed back to your computer and shown on the screen. As a result, the face you see looks quite different from your actual face.
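The round trip described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code behind the service: the names `FaceModel`, `MODELS`, `encode`, `decode`, `server_process` and `client_loop` are all hypothetical, the "model" is a trivial pixel inversion standing in for real neural-network inference, and a plain function call stands in for the network hop to the cluster.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Iterator, List

# A tiny grayscale image: rows of pixel values in the range 0..255.
Frame = List[List[int]]


@dataclass
class FaceModel:
    """Hypothetical stand-in for a trained per-person face model."""
    name: str
    transform: Callable[[Frame], Frame]

    def apply(self, frame: Frame) -> Frame:
        return self.transform(frame)


def invert(frame: Frame) -> Frame:
    # Placeholder transformation; a real model would run inference here.
    return [[255 - px for px in row] for row in frame]


# Hypothetical registry, keyed by the person the user selects.
MODELS: Dict[str, FaceModel] = {"nicolas_cage": FaceModel("nicolas_cage", invert)}


def encode(frame: Frame) -> bytes:
    """Serialize a frame: one width byte, then the pixels row by row.

    This toy format only supports frames up to 255 pixels wide.
    """
    width = len(frame[0])
    return bytes([width]) + bytes(px for row in frame for px in row)


def decode(payload: bytes) -> Frame:
    """Inverse of encode: rebuild the rows from the width byte and pixels."""
    width, pixels = payload[0], payload[1:]
    return [list(pixels[i:i + width]) for i in range(0, len(pixels), width)]


def server_process(payload: bytes, person: str) -> bytes:
    """Cluster side: decode the frame, apply the selected model, re-encode."""
    return encode(MODELS[person].apply(decode(payload)))


def client_loop(webcam: Iterable[Frame], person: str) -> Iterator[Frame]:
    """Browser side: send each captured frame up, yield the frame to display."""
    for frame in webcam:
        # In a real deployment this call would be a network round trip.
        yield decode(server_process(encode(frame), person))
```

Feeding a list of frames into `client_loop(frames, "nicolas_cage")` yields one transformed frame per input frame, in order. Because every frame has to travel up, through the model, and back before it can be shown, the time spent in that loop directly determines how "live" the chat feels.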

You can try to smile, for example, and the model will turn your smile into the smile that celebrity would make. This includes the kind of teeth that celebrity has, the shape of the mouth and lips, etc.

For example, this is what it looks like when we smile while being Nicolas Cage:

Of course, a photo of a computer screen is not that nice, and in particular it does not show how movement in the video makes you appear as a “different person”. Yet it can at least give you an idea of what that is like. Feel free to give it a try yourself.

The Future

We have some ideas for how this can be used in the future – for legitimate purposes, of course. As I don’t want to spoil your thoughts … What do you think this can be used for?