I previously wrote about how and why I was migrating away from Hosteurope to Amazon AWS. The basics steps sounded very simple:

- Backup databases and data directories on the old server

- Set up the new server including all software & services needed

- Restore all backups to the new server

- Change DNS setting to apply changes to all visitors

Sounds easy, right?

Everyone who’s done that kind of thing before, knows it’s never quite that easy. Doing the first step is quite straight forward. Not a big deal. For the second step I ended up using a combination of howtos to get everything set up properly:

- Official AWS EC2: Linux+Apache+MySQL/MariaDB+PHP

Tutorial: Install a LAMP Web Server on Amazon Linux 2 - Official AWS EC2: WordPress

Tutorial: Hosting a WordPress Blog with Amazon Linux - Working CloudFront Config for WordPress:

Setting up WordPress behind Amazon CloudFront - General WordPress on t2.nano Instance:

How I made a tiny t2.nano EC2 instance handle thousands of monthly visitors using CloudFront

My main goal was to run the smallest instance AWS has (currently t3.nano) which costs around $3.75 per month. It turned out, MariaDB (and for that also MySQL) does not start up properly at the nano instance with just 512MB of RAM. Therefore, I had to go at minimum with the t3.micro instance.

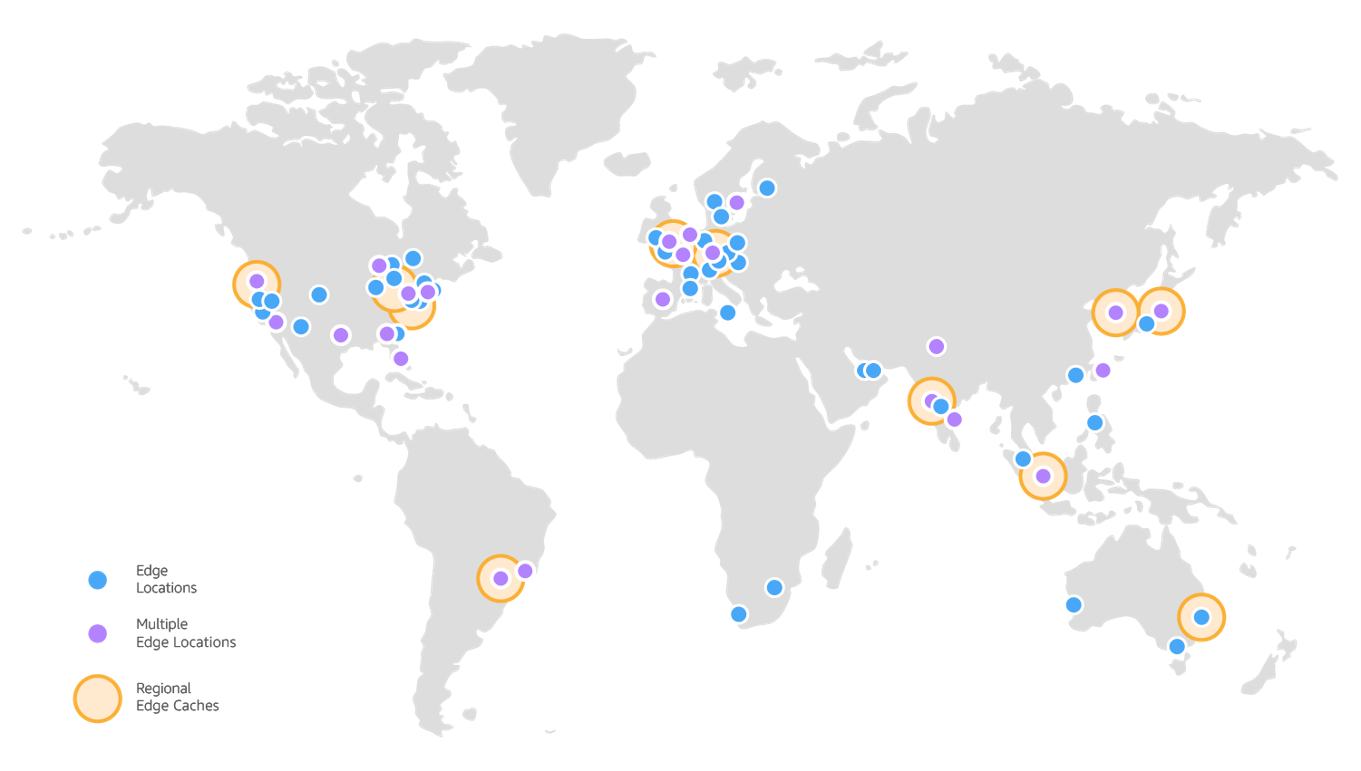

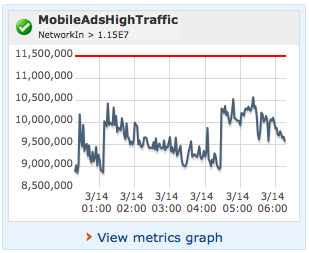

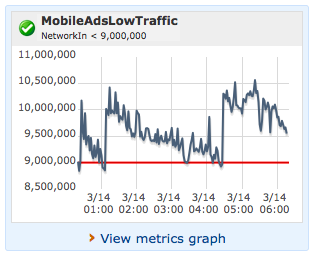

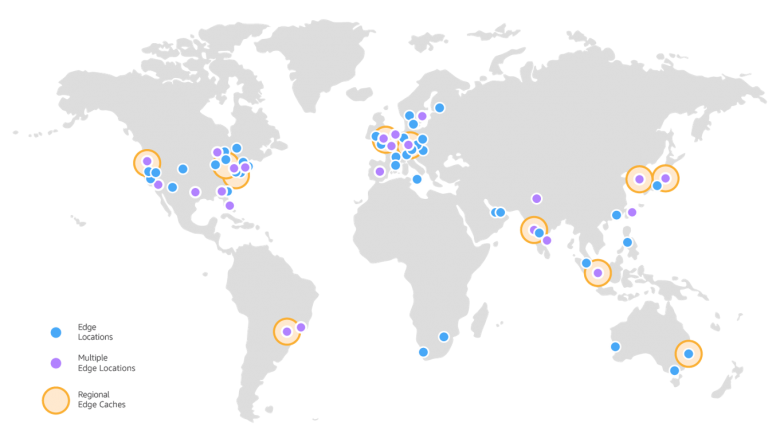

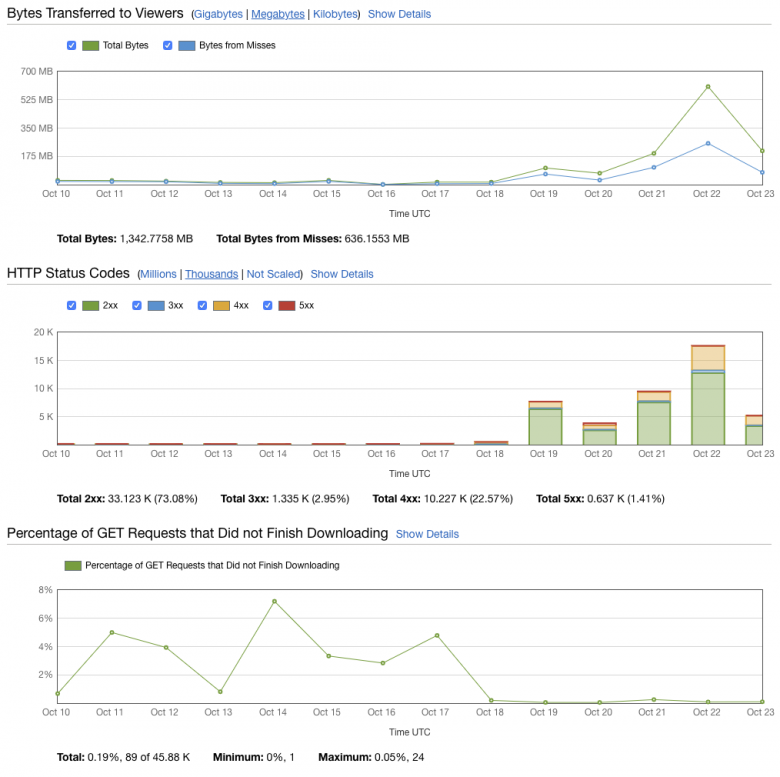

To ensure that load spikes are being handled properly and the server will not go offline during these peak times, a content distribution network (CDN) comes in handy. The great thing about AWS is that they’ve thought of all these scenarios and of course they’ve got a CDN solution ready to deploy. It’s called CloudFront. The tricky part here is, to have CloudFront kick in at the right time, because it caches content to deliver it from its own edge locations across the globe, but at the same time WordPress generates websites dynamically. So CloudFront needs to be able to work in that environment. Setting up CloudFront properly was the part that cost most time, but it works great now.

To ensure that load spikes are being handled properly and the server will not go offline during these peak times, a content distribution network (CDN) comes in handy. The great thing about AWS is that they’ve thought of all these scenarios and of course they’ve got a CDN solution ready to deploy. It’s called CloudFront. The tricky part here is, to have CloudFront kick in at the right time, because it caches content to deliver it from its own edge locations across the globe, but at the same time WordPress generates websites dynamically. So CloudFront needs to be able to work in that environment. Setting up CloudFront properly was the part that cost most time, but it works great now.

I have now deployed CloudFront with multiple sites all running on the same t3.micro instance. One by one I activated for CloudFront distribution and over the past couple of days the traffic handled by CloudFront is going up continuously.

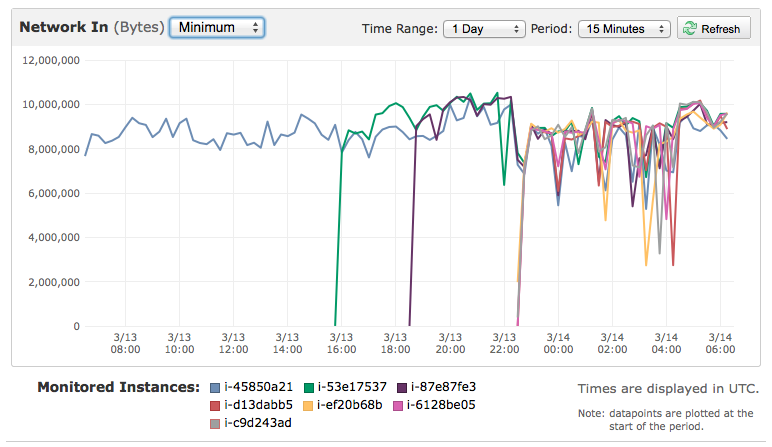

To run massive load tests I used Apache JMeter for the first time. It’s a monster when it comes to load testing and it took me about an hour to get it running the first time. You can literally configure everything on there.

To run massive load tests I used Apache JMeter for the first time. It’s a monster when it comes to load testing and it took me about an hour to get it running the first time. You can literally configure everything on there.

As it is when you’re setting up new things, you’ll have many “new things” you’re working with. In my case, it was the first time I used MariaDB, which is a fork from MySQL. It was also the first time I worked with php-fpm, which “is an alternative PHP FastCGI implementation with some additional features useful for sites of any size, especially busier sites”.

As it is when you’re setting up new things, you’ll have many “new things” you’re working with. In my case, it was the first time I used MariaDB, which is a fork from MySQL. It was also the first time I worked with php-fpm, which “is an alternative PHP FastCGI implementation with some additional features useful for sites of any size, especially busier sites”.

So far I’m quite happy with the current set up. Let’s see how this performs over time. My sites are constantly under attack from bots who are trying to figure out passwords and gain access otherwise to those sites. Yet, every site and server can and will eventually go down under just enough Denial of Service attacks. At the current attack level we’re doing OK, but let’s see how long this lasts and what adjustments I’ll have to deploy.