
I am usually not the kind of guy who likes to boast about numbers, but in this case I believe it helps to put things into perspective. I have been in the mobile app business, targeting consumers directly, for over seven years. Over the years we have created thousands of products: most of them paid, some completely free and some with ads.

For years, I did not believe in monetizing apps through advertising. Even today I am still very skeptical about it, because you need A LOT of ad requests per day to generate any significant income. At the moment, our few (around 10) mobile applications that show ad banners generate up to 165 million (165,000,000) ad requests per day. That adds up to 5.115 billion ad requests per month (165 million × 31 days). Compared with a fairly large mobile advertising company like Adfonic and their “35 billion ad requests per month” (src: About Adfonic), we are doing quite alright for a small app company.

We use a mix of different ad networks, depending on what performs best on which platform. On top of that, we break traffic down by country/region so that each request goes to the ad provider that works best for that region. Some of those providers do not support certain platforms natively, so we built server-side components that handle such ad requests as a kind of “proxy”; this still lets us show their ads in apps on platforms the provider does not officially support. Basically, we have a mini website that just shows an ad, and hundreds of thousands of mobile devices from all over the world can access it at the same time.
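The per-platform, per-region provider selection described above could be encoded as a small lookup table. This is a minimal Python sketch under stated assumptions: the provider names (`network_a` and so on), the region codes and the support matrix are all hypothetical, and `choose_route` is an illustrative helper, not our actual server code. It also flags when a request has to go through the server-side proxy because the chosen provider has no native support for the platform.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    provider: str
    via_proxy: bool  # True when the provider has no native SDK for this platform

# Hypothetical routing table: (platform, country/region) -> provider; "*" = any region.
ROUTES = {
    ("ios", "US"): "network_a",
    ("ios", "*"): "network_b",
    ("android", "EU"): "network_c",
    ("android", "*"): "network_a",
    ("windowsphone", "*"): "network_a",
}

# Hypothetical support matrix: platforms each provider supports natively.
NATIVE_PLATFORMS = {
    "network_a": {"ios", "android"},
    "network_b": {"ios"},
    "network_c": {"android", "windowsphone"},
}

def choose_route(platform: str, region: str) -> Route:
    """Pick the provider for this platform/region, falling back to the wildcard."""
    provider = ROUTES.get((platform, region)) or ROUTES.get((platform, "*"))
    if provider is None:
        raise LookupError(f"no ad provider configured for {platform!r}")
    native = platform in NATIVE_PLATFORMS.get(provider, set())
    return Route(provider=provider, via_proxy=not native)

print(choose_route("ios", "US"))
print(choose_route("windowsphone", "EU"))
```

In practice the choice would be driven by measured performance per platform and region; a static table is simply the easiest way to show the shape of that decision.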

Using cloud service providers like Amazon AWS, Rackspace, SoftLayer, Microsoft Azure or others, anyone can serve a virtually unlimited number of requests these days; it all depends on your credit card limit. Applications obviously have usage patterns, and over the course of the day our traffic goes up and down. For example, at midnight GMT usage of our applications peaks worldwide, so they request more ads. Five hours later, we experience the lowest traffic. Depending on app store release schedules, promotions, featured listings, user notifications and external promotions on blogs or elsewhere, sudden unexpected spikes in traffic can occur at any time. “Automatic Scaling” in combination with “Load Balancing” seemed to be the magic solution for this.

After months of running a bunch of server instances behind a load balancer, we were quite happy with the performance. It was easy for us to determine usage patterns and to see how many servers we needed to handle the average peak number of requests without our service failing. We didn’t think much about cost optimization because our cloud computing bills weren’t that high, so we never really worked on auto-scaling components. That had two main disadvantages. Firstly, we spent more than we needed to, as we probably didn’t need half of the servers that were running during low traffic. Secondly, we were not prepared for sudden massive spikes in traffic. With TreeCrunch, on the other hand, we are building a scalable system from the ground up.

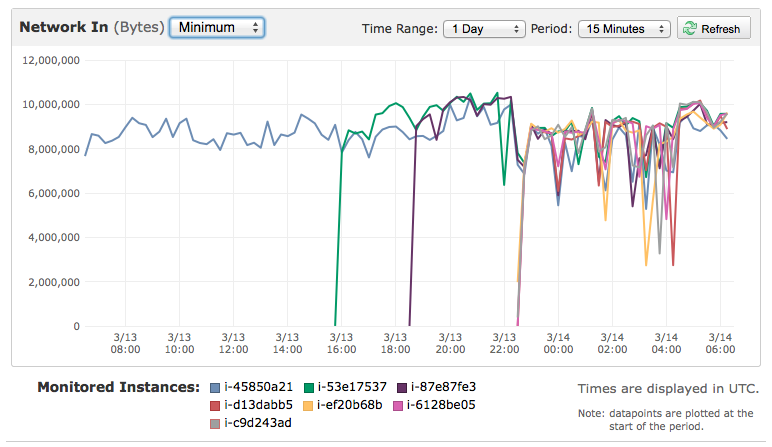

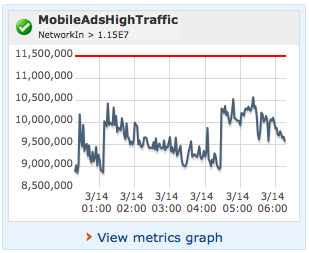

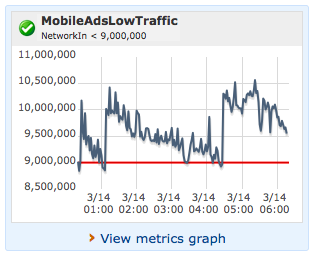

So early this week I took three hours to look into how to implement such auto-scaling; two of those hours actually went into installing the tools properly. As you can see in the charts above, the system now works like this: when the average traffic per server reaches a maximum threshold, a new server is created and added to our load balancer, and it immediately starts serving ads. When the average traffic falls below a lower threshold, a server is terminated and stops serving ads. To minimize server load, we run really tiny PHP scripts that are heavily optimized for a single purpose: requesting an ad from the desired ad company and delivering that ad to the client (the mobile app). As our web server we currently use lighttpd, which is very lightweight indeed.

Interestingly, we noticed that “normal” system resources are not really the bottleneck when handling a lot of requests. Our CPU usage is fairly acceptable (constantly around 50%), we don’t need any hard drive space as we are just proxying requests, and we don’t even run out of memory. The first limit one of our ad servers hits is the maximum number of sockets in use, which by default is around 32,000 (or something like that) on Debian-based Linux. That is more or less an artificial limit imposed by the operating system, but we haven’t experimented with adjusting it yet.
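The scaling rule described above boils down to a threshold check with an upper and a lower limit. The following Python sketch shows only that decision logic, assuming hypothetical threshold values in requests per second per server; actually launching and terminating instances is left to the auto-scaling tooling and is not modelled here. The extra check before scaling in is a design choice of this sketch: it avoids removing a server if the remaining ones would immediately exceed the scale-out threshold, which would make the group flap up and down.

```python
def scaling_decision(total_rps: float, servers: int,
                     scale_out_above: float, scale_in_below: float,
                     min_servers: int = 1) -> int:
    """Return +1 to add a server, -1 to remove one, 0 to do nothing.

    total_rps is the current request rate across the whole group.
    Threshold values are hypothetical and would be tuned per workload.
    """
    floor = max(min_servers, 1)  # never scale the group down to zero servers
    if servers < floor:
        return 1
    avg = total_rps / servers
    if avg > scale_out_above:
        return 1
    if avg < scale_in_below and servers > floor:
        # Only scale in if the remaining servers stay under the scale-out limit.
        if total_rps / (servers - 1) <= scale_out_above:
            return -1
    return 0

# Hypothetical thresholds: add a server above 1,200 req/s each, remove one below 400.
for load in (3000, 1000, 600):
    print(load, scaling_decision(load, servers=2, scale_out_above=1200, scale_in_below=400))
```

Keeping the two thresholds far apart gives the same kind of hysteresis: a single new server should not push the average straight back below the scale-in limit.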

Summary: In a fairly short period of time, we managed to set up a proper auto-scaling policy that allows us to scale up to a virtually unlimited number of ad requests. On fairly low budgets, companies nowadays can set up proper data centers, serve millions of users and maintain their infrastructure with a few very talented people, without purchasing any hardware that would be obsolete a year or two later.

I love the time I am living in. Every single day something new and exciting pops up.

Greetings,

I read this article and wanted to ask: what would be a good solution for serving 1 million simple HTTP GET requests a minute? Most of the time the requests will be answered from a cache, though at times the cache itself might be changing very frequently as well. Replies will be JSON-encoded data, hardly 1 KB per reply.

I was looking into using Amazon DynamoDB, but I am not sure I really need it, since I would prefer to use my existing PHP/MySQL setup. However, I am not sure what hardware or which Amazon service to use.

Since you are more experienced in this, any suggestions would be highly appreciated.

Hi Zeeshan,

That very much depends on what kind of data you want to serve. If it is just static data that does not change very frequently, you could store the files on Amazon S3 and serve them through Amazon CloudFront for high reliability, super-fast delivery and transparent caching in a data center close to the requesting party.

If your data is more dynamic (i.e. pulled from a database by a script), it also very much depends on which kinds of data operations you expect. The standard (old-school) approach would be a (My)SQL master/slave setup where multiple slaves handle read (SELECT) requests and only a few instances, or even just one, handle write requests. However, this confronts you with other maintenance problems: keeping all those SQL servers in sync, maintaining proper backups, applying security fixes, etc.
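On the application side, a master/slave setup usually means routing each statement to the right server. Here is a minimal Python sketch of that read/write split, with plain strings standing in for real database connections; `ReadWriteRouter` is a hypothetical helper, not part of any MySQL driver. It sends SELECTs to the slaves in round-robin order and everything else to the master.

```python
import itertools
from typing import Any, Sequence

class ReadWriteRouter:
    """Send reads to the slaves (round-robin) and writes to the master."""

    def __init__(self, master: Any, slaves: Sequence[Any]):
        self.master = master
        self._slaves = itertools.cycle(slaves) if slaves else None

    def pick(self, sql: str) -> Any:
        statement = sql.lstrip().upper()
        # Naive classification; SELECT ... FOR UPDATE locks rows, so it goes to the master.
        is_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        if is_read and self._slaves is not None:
            return next(self._slaves)
        return self.master

router = ReadWriteRouter("master", ["slave1", "slave2"])
for sql in ("SELECT * FROM ads", "INSERT INTO clicks VALUES (1)", "select 1"):
    print(sql, "->", router.pick(sql))
```

Keep in mind that MySQL replication is asynchronous by default, so a read sent to a slave right after a write may not see that write yet.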

If your data is very simple, Amazon SimpleDB might be worth looking into. It can (apparently) scale without limit in terms of size and is based on a key-value style architecture, much like other NoSQL databases. However, as the name implies, this solution only provides quite “simple” data structures.

If your data is more complex and you are expecting A LOT of it, Amazon DynamoDB is definitely a great service to look at. There are dozens of great NoSQL databases, but Amazon was one of the first companies to define strategies for storing and accessing large amounts of data consistently, frequently and in the most efficient way. DynamoDB comes with features like guaranteed read/write capacity, which can be adjusted dynamically to your system’s current needs, data storage exclusively on SSD drives for super-fast access, and data redundancy.
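To get a feel for what guaranteed read capacity means for the numbers in your question, here is a rough Python estimate based on DynamoDB’s provisioned-throughput units: one read capacity unit covers one strongly consistent read per second of an item up to 4 KB, and an eventually consistent read (the default for reads) costs half of that. The 90% cache hit ratio is a made-up assumption for illustration; with your cache in front, only the misses would reach DynamoDB.

```python
import math

def read_capacity_units(reads_per_second: float, item_kb: float,
                        eventually_consistent: bool = True) -> int:
    """Provisioned RCUs needed for a steady read rate (DynamoDB provisioned mode)."""
    units_per_read = math.ceil(item_kb / 4)  # each started 4 KB of an item costs one unit
    rcu = reads_per_second * units_per_read
    if eventually_consistent:
        rcu /= 2  # eventually consistent reads cost half a unit
    return math.ceil(rcu)

requests_per_second = 1_000_000 / 60  # 1 million GETs a minute, about 16,667 per second
cache_hit_ratio = 0.9                 # hypothetical: 90% answered from the cache
db_reads = requests_per_second * (1 - cache_hit_ratio)

print("no cache, strongly consistent:", read_capacity_units(requests_per_second, 1, False))
print("no cache, eventually consistent:", read_capacity_units(requests_per_second, 1))
print("with cache, eventually consistent:", read_capacity_units(db_reads, 1))
```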

In any case, as soon as a database is involved you will probably need a server-side component (like the PHP you mentioned) that reads and writes data to and from the database, whichever one you use. Once you have that, you need a load balancer (such as Amazon Elastic Load Balancing, ELB) that distributes requests across the server instances handling the database operations. Make your server-side scripts as lightweight as possible, use the most lightweight web server you can find, and make sure no unneeded services are running on those servers.
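Since most of your requests can be answered from a cache, the server-side component would typically check the cache first and only hit the database on a miss (the cache-aside pattern). Below is a minimal in-process Python sketch; `TTLCache`, `load_from_db` and the `loader` callback are hypothetical names, and the short time-to-live plus explicit `invalidate` are one way to cope with cache contents that change frequently. Note that each instance behind the load balancer would keep its own copy of this cache.

```python
import time
from typing import Any, Callable, Dict, Tuple

class TTLCache:
    """Cache-aside helper: serve from memory, load from the database on a miss."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the expiry logic can be tested
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit
        value = loader(key)  # miss or expired: e.g. a MySQL or DynamoDB read
        self._store[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop a key right away, e.g. after the underlying record was written."""
        self._store.pop(key, None)

def load_from_db(key: str) -> dict:
    # Hypothetical stand-in for a real database query.
    return {"id": key, "payload": "..."}

cache = TTLCache(ttl_seconds=5)
print(cache.get_or_load("item:42", load_from_db))
```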

There are many, many things to consider. So, to cut the answer short: it all depends on your requirements and the kind of data you want to serve. Although you have been quite specific, there are still some variables that can have a huge effect on your system’s performance 🙂

Nice and interesting article. Your approach and infrastructure are very different from what I am working with.